Philipp

Asynchronous Web Scraping Using Python

Scraping data from the web is a common task - whether it’s fetching data from an API or collecting information from thousands of web pages. In this post I’m going to describe how to do so a lot faster - asynchronously.

So what does asynchronous mean?

Many programs run synchronously. For example, a simple for loop:

```python
words = ['apple', 'orange', 'banana']
count = 0

for word in words:
    count += 1
    print(word)
```

This loop runs in order, or synchronously: apple is printed first, then orange, then banana.

The same can be done with web requests.

Synchronous Requests

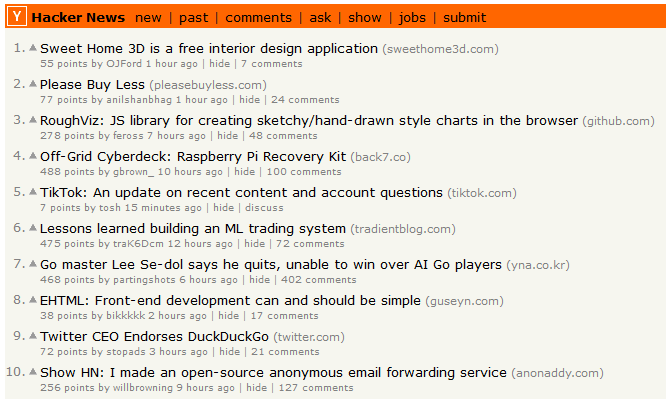

In this example, I fetch the latest stories from Y Combinator’s Hacker News, along with each story’s score and the site it was posted from. I use the requests library to make the requests, BeautifulSoup4 to parse the pages, and pandas to store the data in a DataFrame.

```python
from bs4 import BeautifulSoup as bs
import pandas as pd
import requests
import time

# Returns the page
def fetch_res(url):
    return requests.get(url)

# URL structure: root_url + page number
root_url = "https://news.ycombinator.com/news?p="
numpages = 5
hds, links = [], []

# Generate links for us to fetch
for i in range(1, numpages + 1):
    links.append(root_url + str(i))

start_time = time.time()

# In every page, we fetch the headline, the score, and the site it originated from.
# The class names match Hacker News's markup at the time of writing and may have changed since.
for link in links:
    page = fetch_res(link)
    parsed = bs(page.content, 'html.parser')
    headlines = parsed.find_all('a', class_='storylink')
    scores = parsed.find_all('span', class_='score')
    sitestr = parsed.find_all('span', class_='sitestr')
    for a, b, c in zip(headlines, scores, sitestr):
        hds.append([a.get_text(), int(b.get_text().split()[0]), c.get_text()])

print(time.time() - start_time, "seconds")

df = pd.DataFrame(hds)
df.columns = ['Title', 'Score', 'Site']
print(df.head(10))
```

This results in:

```
4.734636545181274 seconds
   Title                                              Score  Site
0  Sweet Home 3D is a free interior design applic...     54  sweethome3d.com
1  Please Buy Less                                       74  pleasebuyless.com
2  RoughViz: JS library for creating sketchy/hand...    278  github.com
3  Off-Grid Cyberdeck: Raspberry Pi Recovery Kit        488  back7.co
4  TikTok: An update on recent content and accoun...      7  tiktok.com
5  Lessons learned building an ML trading system        475  tradientblog.com
6  Go master Lee Se-dol says he quits, unable to ...    468  yna.co.kr
7  EHTML: Front-end development can and should be...     38  guseyn.com
8  Twitter CEO Endorses DuckDuckGo                       72  twitter.com
9  Show HN: I made an open-source anonymous email...    256  anonaddy.com
```

This matches what was on the Hacker News front page at the time of writing.
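One caveat about the scraping loop: zip() pairs headlines, scores and sites purely by position. Suppose a story on a page lacks one of those elements, for example a text post with no external site. Every later value then shifts onto the wrong story, and zip() silently drops whatever is left over at the end.

The stdlib-only sketch below uses made-up lists in place of real find_all() results to show the effect. It also shows the shape of a safer alternative: keep each story’s fields together, and let a missing field become None. parse_score() is a hypothetical helper. How to select each story’s row in Hacker News’s actual markup is not shown here and would need to be checked against the live page.

```python
def parse_score(score_text):
    """Hypothetical helper: turn '54 points' into 54, or None if there is no score."""
    if score_text is None:
        return None
    return int(score_text.split()[0])


# Made-up data: story B has no score and story C has no site.
headlines = ["Story A", "Story B", "Story C"]
scores = ["54 points", "74 points"]  # really belong to A and C
sites = ["a.com", "b.com"]           # really belong to A and B

# Positional zip(): pairs by index and truncates to the shortest list,
# so Story B is credited with Story C's score and Story C is dropped.
zipped = [[h, parse_score(s), site] for h, s, site in zip(headlines, scores, sites)]
print(zipped)  # [['Story A', 54, 'a.com'], ['Story B', 74, 'b.com']]

# Per-story grouping: each story carries its own (possibly missing) fields,
# so a gap becomes None instead of shifting every later value.
stories = [
    ("Story A", "54 points", "a.com"),
    ("Story B", None, "b.com"),
    ("Story C", "74 points", None),
]
grouped = [[title, parse_score(score), site] for title, score, site in stories]
print(grouped)
# [['Story A', 54, 'a.com'], ['Story B', None, 'b.com'], ['Story C', 74, None]]
```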

Fetching every story, score, and site for the first five pages of Hacker News took about five seconds. That’s pretty fast! But what if we increase the number of pages we want to scrape? Say, to 25?

```
24.343989372253418 seconds
```

Increasing the number of pages by a factor of 5 increased the time taken by roughly the same factor (from about 4.7 to about 24.3 seconds). Interestingly, if we measure the run with the Unix time command, we see the following:

```
:~$ time python3 sync_req.py
real    0m24.900s
user    0m3.634s
sys     0m0.672s
```

How do we interpret this?

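• real: the elapsed wall-clock time from when the process starts to when it finishes, including time spent waiting (for example, on the network).

• user: the cumulative CPU time, across all cores, spent executing the process’s own code in user mode.

• sys: the cumulative CPU time, across all cores, spent in the kernel on the process’s behalf, for example in system calls for I/O or memory allocation.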

The user and sys times need not add up to the real time. Because they measure cumulative CPU time, on a multicore system their sum can even exceed the real time. Conversely, when a process spends most of its time waiting, their sum can fall far short of it.
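The same wall-clock versus CPU split can be measured from inside Python. time.perf_counter() tracks elapsed wall-clock time, which is roughly what real reports. time.process_time() returns the user plus system CPU time consumed by the current process.

In this minimal sketch, the hypothetical helpers simulated_fetch() and busy_work() stand in for waiting on a server and for parsing. Nothing is actually downloaded.

```python
import time


def simulated_fetch(seconds):
    """Hypothetical stand-in for a network request: just wait, using no CPU."""
    time.sleep(seconds)


def busy_work(n):
    """Hypothetical stand-in for parsing: pure Python computation on the CPU."""
    return sum(i * i for i in range(n))


wall_start = time.perf_counter()  # wall-clock time, like `real`
cpu_start = time.process_time()   # CPU time of this process, like `user` + `sys`

simulated_fetch(0.5)
busy_work(200_000)

wall = time.perf_counter() - wall_start
cpu = time.process_time() - cpu_start
print(f"wall: {wall:.2f} s, cpu: {cpu:.2f} s, waiting: {wall - cpu:.2f} s")
```

The sleep shows up in the wall-clock figure but not in the CPU figure, just as the network waits did in the time output above.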

In our 25-page run, however, only about four seconds of CPU time (3.6 s user plus 0.7 s sys) were spent actually processing the data. The remaining 20 or so seconds were spent waiting on network I/O. How can we improve this?

Asynchronous Requests

When scraping sites, as opposed to, for example, fetching data from a financial API, the order of the data doesn’t necessarily matter. This means we can submit our requests in any order (asynchronously) and still receive the same data.

For this example, I reuse the synchronous scraper with one change.

We import ThreadPoolExecutor, which is “an Executor that uses a pool of threads to execute calls asynchronously”. Simply put, it hands your calls to several worker threads. Several requests can therefore be in flight at the same time, instead of running one after another as above.
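The speedup has less to do with the number of cores than it might seem. In CPython, the global interpreter lock lets only one thread execute Python bytecode at a time. However, a thread that is blocked waiting on the network (or in time.sleep()) releases the lock, so the waits themselves can overlap.

The minimal sketch below illustrates this. It uses a hypothetical simulated_fetch() that sleeps for 0.2 s instead of making a request, and placeholder page names instead of URLs. With 8 such calls, the sequential loop should take roughly 1.6 s and a 4-thread pool roughly 0.4 s, though exact numbers vary by machine.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_fetch(url):
    """Hypothetical stand-in for requests.get(): wait 0.2 s, then return the URL."""
    time.sleep(0.2)  # blocking wait; CPython releases the GIL while sleeping
    return url


urls = [f"page-{i}" for i in range(8)]  # placeholder names, nothing is fetched

start = time.perf_counter()
sequential = [simulated_fetch(u) for u in urls]  # 8 waits, one after another
sequential_time = time.perf_counter() - start

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as executor:
    threaded = list(executor.map(simulated_fetch, urls))  # up to 4 waits overlap
threaded_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f} s, 4 threads: {threaded_time:.2f} s")
```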

```python
from bs4 import BeautifulSoup as bs
from concurrent.futures import ThreadPoolExecutor as PoolExecutor
import requests
import pandas as pd
import time

# Returns the page
def fetch_res(url):
    return requests.get(url)

# URL structure: root_url + page number
root_url = "https://news.ycombinator.com/news?p="
numpages = 25
hds, links = [], []

# Generate links for us to fetch
for i in range(1, numpages + 1):
    links.append(root_url + str(i))

start_time = time.time()

# Fetch the pages on 4 worker threads; map() yields the responses in the
# same order as links, and each page is parsed here in the main thread
with PoolExecutor(max_workers=4) as executor:
    for page in executor.map(fetch_res, links):
        parsed = bs(page.content, 'html.parser')
        headlines = parsed.find_all('a', class_='storylink')
        scores = parsed.find_all('span', class_='score')
        sitestr = parsed.find_all('span', class_='sitestr')
        for a, b, c in zip(headlines, scores, sitestr):
            hds.append([a.get_text(), int(b.get_text().split()[0]), c.get_text()])

print(time.time() - start_time, "seconds")

df = pd.DataFrame(hds)
df.columns = ['Title', 'Score', 'Site']
print(df.head(10))
```

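The difference is in how the pages are fetched. Instead of iterating over the list of links and fetching each page in turn, we create a pool of 4 threads (max_workers=4). We then use executor.map to distribute the fetch_res calls across those threads. The pages are still parsed one at a time in the main thread as their responses come back.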
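Because executor.map returns results in the same order as its inputs, the parsing loop always receives page 1 first, then page 2, and so on. This holds even if a later page happens to arrive sooner. If order truly does not matter, concurrent.futures.as_completed() instead yields each future as soon as it finishes.

The sketch below contrasts the two approaches. It uses a hypothetical simulated_fetch() that sleeps for a given delay rather than making a real request.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def simulated_fetch(delay):
    """Hypothetical stand-in for a request that takes `delay` seconds."""
    time.sleep(delay)
    return delay


delays = [0.3, 0.1, 0.2]  # the first "page" is the slowest to respond

with ThreadPoolExecutor(max_workers=3) as executor:
    # map() yields results in input order, waiting on slow ones if necessary.
    in_input_order = list(executor.map(simulated_fetch, delays))

    # as_completed() yields each future as soon as it finishes.
    futures = [executor.submit(simulated_fetch, d) for d in delays]
    in_completion_order = [f.result() for f in as_completed(futures)]

print(in_input_order)       # [0.3, 0.1, 0.2]
print(in_completion_order)  # usually [0.1, 0.2, 0.3]
```

With as_completed(), the main thread could start parsing whichever page arrives first, at the cost of losing the original page order.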

Timing the threaded scraper with 25 pages, as before, gives:

```
:~$ time python3 async_req.py
9.342963218688965 seconds
real    0m9.885s
user    0m2.033s
sys     0m0.711s
```

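This is a significant improvement over the original 24 seconds: the time taken was cut by more than half. I’m using a Ryzen 5 2600X, which has 6 cores and 12 hardware threads, so let’s try 12 workers. For I/O-bound work like this, the useful number of workers isn’t really bounded by the core count, since the threads are mostly idle while they wait on the network. Increasing max_workers to 12 yields: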

```
:~$ time python3 async_req.py
3.555405378341675 seconds
real    0m4.082s
user    0m1.602s
sys     0m0.710s
```

Our 12-thread scrape took about 3.6 seconds, compared with about 24 seconds for the single-threaded version: a speedup of nearly 7x.
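A back-of-the-envelope model helps explain why more workers keep helping. Assume every page takes about the same time to arrive, and the pool fetches pages in rounds of max_workers at a time. The total is then roughly ceil(pages / workers) rounds times the per-page latency.

The sketch below implements that idealized model. It is my own simplification rather than a measurement. It uses the per-page time implied by the 25-page synchronous run (24.34 s / 25 ≈ 0.97 s).

```python
import math


def estimated_seconds(num_pages, seconds_per_page, workers):
    """Idealized model (an assumption): pages are fetched in rounds of
    `workers` at a time, each round costs one page's latency, and parsing
    and thread overhead are ignored."""
    rounds = math.ceil(num_pages / workers)
    return rounds * seconds_per_page


per_page = 24.34 / 25  # about 0.97 s per page, from the synchronous 25-page run

for workers in (1, 4, 12):
    print(workers, round(estimated_seconds(25, per_page, workers), 1))
# 1 24.3
# 4 6.8
# 12 2.9
```

The model predicts about 24.3, 6.8 and 2.9 seconds for 1, 4 and 12 workers, against the measured 24.3, 9.3 and 3.6. The gap is plausibly due to the parsing, which still runs in the main thread, and to uneven server response times.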

It’s clear, then, that fetching pages concurrently can greatly speed up web scraping. The technique isn’t limited to scraping, either: it can speed up any task that spends most of its time waiting on I/O and whose pieces don’t depend on one another.

If you are interested, all the code used in this post is available on my GitHub: https://github.com/starovp/starovp-blog/tree/master/nov/asynchronous-web-scraping

References

- Real, User and Sys process time statistics

- concurrent.futures - Python 3.7.5 documentation